Did We Lose Something With The Adoption Of Containers? And Can We Get It Back?

The subject of containers probably doesn’t need much of an introduction. Since the launch of Docker in 2013, containers have become almost ubiquitous, with 89% of IT professionals confirming that their organizations used containers in some way during 2019.

That is no surprise. Docker itself calls the container “a standardized unit of software”, which is a nice way of saying that a typical container packages up all the relevant code, system tools, dependencies and libraries for an application, and effectively isolates that application from the rest of the environment and infrastructure.

As a result, containers have one great merit: they will reliably run, and run in the same way, wherever they are deployed. At a stroke, they eliminate the “well it’s working on my machine” problem that has caused endless heartache for both development and operations teams over the decades.

That benefit should not be under-estimated. For developers in particular, it is rather wonderful to be able to put everything into a container and throw it over the wall knowing that it is going to deploy, and in turn knowing that it isn’t going to come back over the same wall for round 2.

It’s also - at least in theory - good for the operations team, for all of the same sorts of reasons.

But it isn’t all good news.

What Is A “Unit” Of Software, Really?

Prompted by the Docker definition of a container I mentioned above, it might be instructive to ask ourselves whether the container is either the only, or indeed the best, ‘unit’ of software.

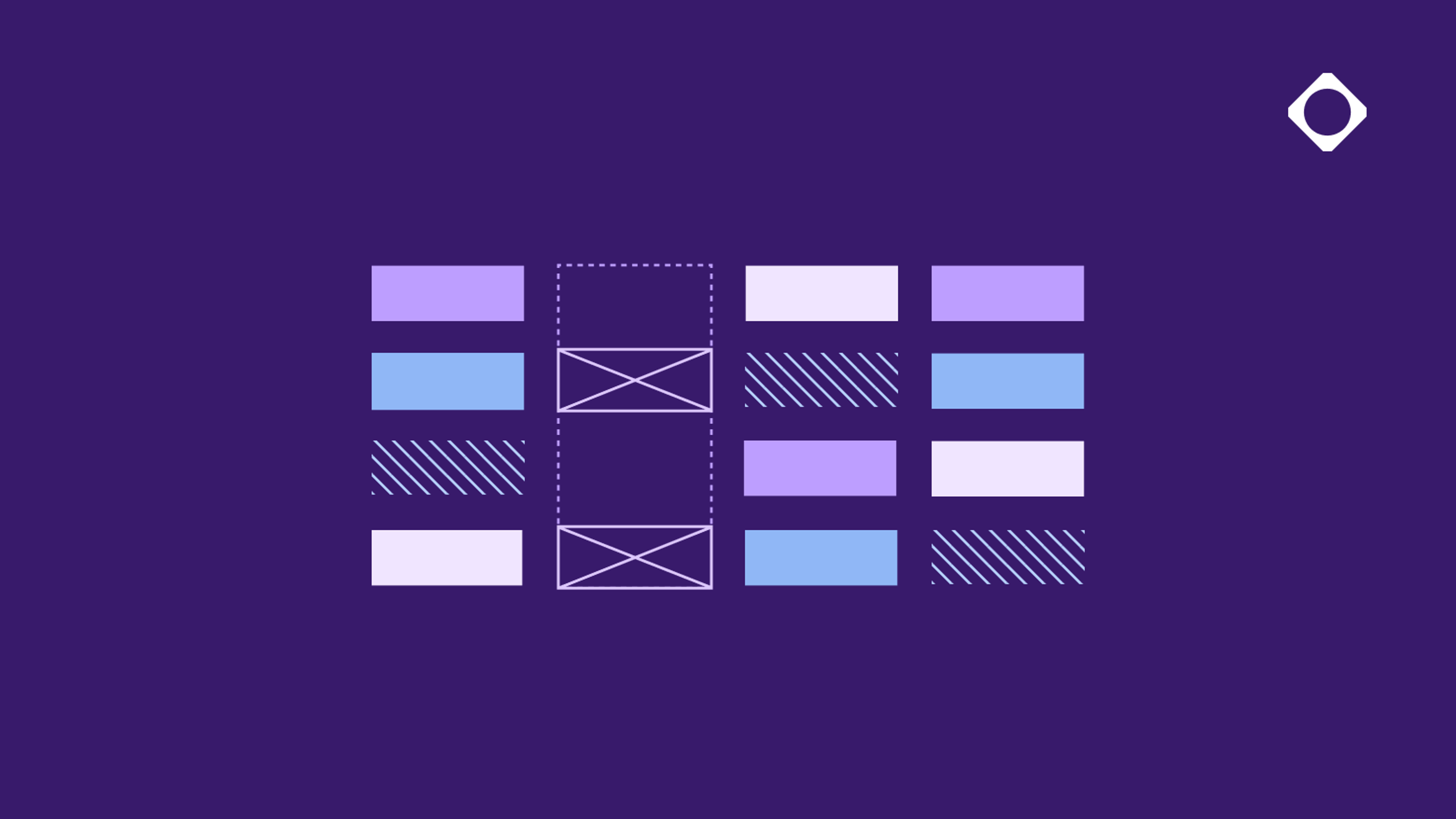

If we take a 10,000 ft view of the history of software development it probably looks something like this:

- The entirety of the source code is the unit

- The package is the unit

- The container is the unit

In the old days of source code you really had to be on top of things. The whole thing stood up or fell over as a whole, and things were pretty painful and expensive if (when) it did the latter.

When the package became the unit, things improved. Certainly, if we were following good package management and distribution practices, then in most cases things fell over less, and were easier to fix when they did. I’ll talk more about this later, but let’s also remember that packages help speed up development, full stop, as they enable us to re-use code and services more easily.

In the move to containerization, the third stage in our evolution, the ‘unit’ of software has got bigger. As it has done so, it has concealed complexity in order to enable that crucial isolation from the environment it runs in.

Essentially, the container runs as a kind of black box. The operations team just need to know what it does. They don’t need more detailed metadata and they don’t need to know what is inside it. After all, it’s all one self-contained package. So the unit of software has not only got bigger, it has also become less transparent.

The Problem With Being Big And Opaque

The problem with a large unit size is that within it are likely to be hundreds if not thousands of dependencies.

And the problem with being opaque is that we don’t know what they are.

What happens when something goes wrong? Let’s imagine (and this won’t take too much effort for veterans of left-pad, Heartbleed, event-stream or any number of other dependency-related screw-ups) that a vulnerability is discovered within a certain package.

Where is that package currently in use in our ecosystem? We don’t know. Can we quickly roll that package back to a known ‘safe’ version wherever necessary? No.

Containers - as a ‘unit’ of software - live or die as a whole. If something is wrong, or might be wrong, we have no option other than to spin up a new version and redeploy: not necessarily a simple task. And as we don’t necessarily know which containers are affected, we find ourselves struggling to get on top of an emerging security crisis based on limited data. Not a nice place to be.
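To make the "where is that package in use?" question concrete, here is a minimal sketch of the lookup we would like to be able to run. It assumes that a per-image record of package versions exists somewhere, which is exactly what an opaque container does not give us by default. The `IMAGE_MANIFESTS` data, the image names and the `images_using` helper are all hypothetical and purely illustrative.

```python
from typing import Dict, List, Set

# Hypothetical inventory: image name -> {package name: pinned version}.
# Assumption: something in the build pipeline records this for every image.
IMAGE_MANIFESTS: Dict[str, Dict[str, str]] = {
    "web-frontend:1.4": {"left-pad": "1.3.0", "express": "4.17.1"},
    "billing-api:2.0": {"event-stream": "3.3.6", "express": "4.17.1"},
    "report-worker:0.9": {"requests": "2.22.0"},
}


def images_using(
    package: str,
    bad_versions: Set[str],
    manifests: Dict[str, Dict[str, str]],
) -> List[str]:
    """Return the images that contain `package` at one of `bad_versions`."""
    affected = [
        image
        for image, packages in manifests.items()
        if packages.get(package) in bad_versions
    ]
    return sorted(affected)


if __name__ == "__main__":
    # Which images need rebuilding if this package version turns out to be bad?
    print(images_using("event-stream", {"3.3.6"}, IMAGE_MANIFESTS))
```

Without a record like this, the only way to answer the question is to open up every container and look.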

The Lost Art Of Package Management

My last point is this: that containerization has led to a general decline in diligence and observance of best practices when it comes to handling packages and dependencies. After all, if I am going to throw them all into one container and check if it runs, does it really matter where I get packages from?

The short answer is yes.

The longer answer is yes, because if we aren’t going to be sure what is in any given container further down the line, we should be as careful as we possibly can be that nothing we can’t stand over sneaks in now.

Unfortunately, that doesn’t always happen. The path of least resistance is to get dependencies from pretty much anywhere and live with the consequences later. And as the developer cares about speed, and the ops team will be the ones dealing with those consequences, there is all the more reason to cut corners.

As developers, we need to get back to being diligent - and consistently diligent - about questions of security, provenance, reliability and availability when it comes to packages and dependencies. We need to be as sure as we can be that what we integrate into our projects (whether destined for containers or not) is what it says it is. And we need to know what we used where and when - so that we can fall back to a previous version quickly and easily if necessary.
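As a rough illustration of what "is what it says it is" and "what we used where and when" could mean in practice, the sketch below checks a downloaded artifact against a checksum we expected and, only if it matches, produces a record of the usage. The `UsageRecord` type, the `verify_and_record` function and the field names are assumptions made for this example, not part of any particular tool.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class UsageRecord:
    """Hypothetical record of which package went into which project, and when."""
    project: str
    package: str
    version: str
    sha256: str
    recorded_at: str


def verify_and_record(
    project: str,
    package: str,
    version: str,
    artifact: bytes,
    expected_sha256: str,
) -> UsageRecord:
    """Refuse artifacts whose checksum differs from the one we expected."""
    actual = hashlib.sha256(artifact).hexdigest()
    if actual != expected_sha256:
        raise ValueError(
            f"{package} {version}: checksum mismatch "
            f"(expected {expected_sha256}, got {actual})"
        )
    return UsageRecord(
        project=project,
        package=package,
        version=version,
        sha256=actual,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )
```

Stored over time, records like these are what make it possible to say which version a project used on a given date, and which earlier version to fall back to.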

It’s the least our colleagues in operations, and the users of our end product, deserve.
