Adding AI to applications using the Model Context Protocol

Large Language Models (LLMs) are now at the cutting edge of mainstream AI systems. Their impact has been seismic, sparking a new gold rush as application developers shift the user experience away from clicks and commands and toward natural language and advanced automation.

However, application developers have a barrier to overcome. AI models need data to reason about and respond within a particular application domain. Say, for example, the user of an artifact management platform needs to pose a simple question, ‘What packages are trending upward in usage?’ To answer this question, the AI needs access to package data, including usage data.

The MxN Problem of LLM Integration

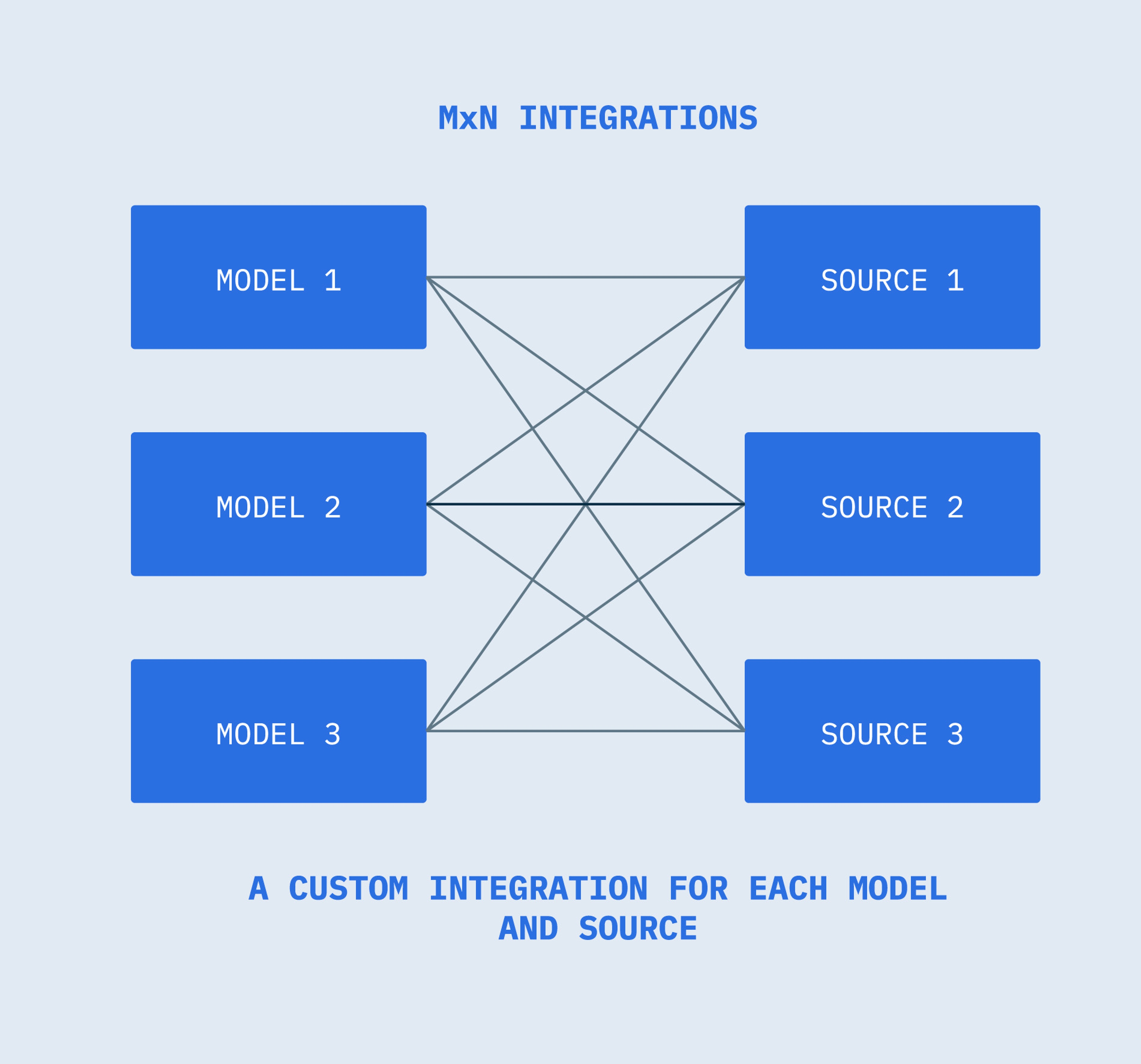

In general, an AI model's capabilities depend on the information, or context, available to it. And here is the problem: AI models tend to be isolated from data. Getting data into the AI model is not trivial. The data is often locked away in information silos and legacy systems. Integrating models usually involves a custom implementation with bespoke connectors.

It’s as if each time you plugged a device into a computer’s USB port, you had to configure the connection on a per-device basis. And so it is with AI models. The implementation has to be done on a per-data source, per-AI model basis, each time needing its own connector, protocol, and security checks. This multiplies the work involved in integration and tethers the LLM to a particular source, making it difficult to scale.

This multiplicity of connectors between each model and each source has been termed the MxN problem. Every model (M) needs a custom integration for every source or service (N), resulting in a multitude of one-off adapters that are a headache to build and maintain. As more models and tools enter the ecosystem, the integration load grows multiplicatively: each new model needs N more connectors, and each new source needs M more.

MCP: One Protocol to Rule Them All

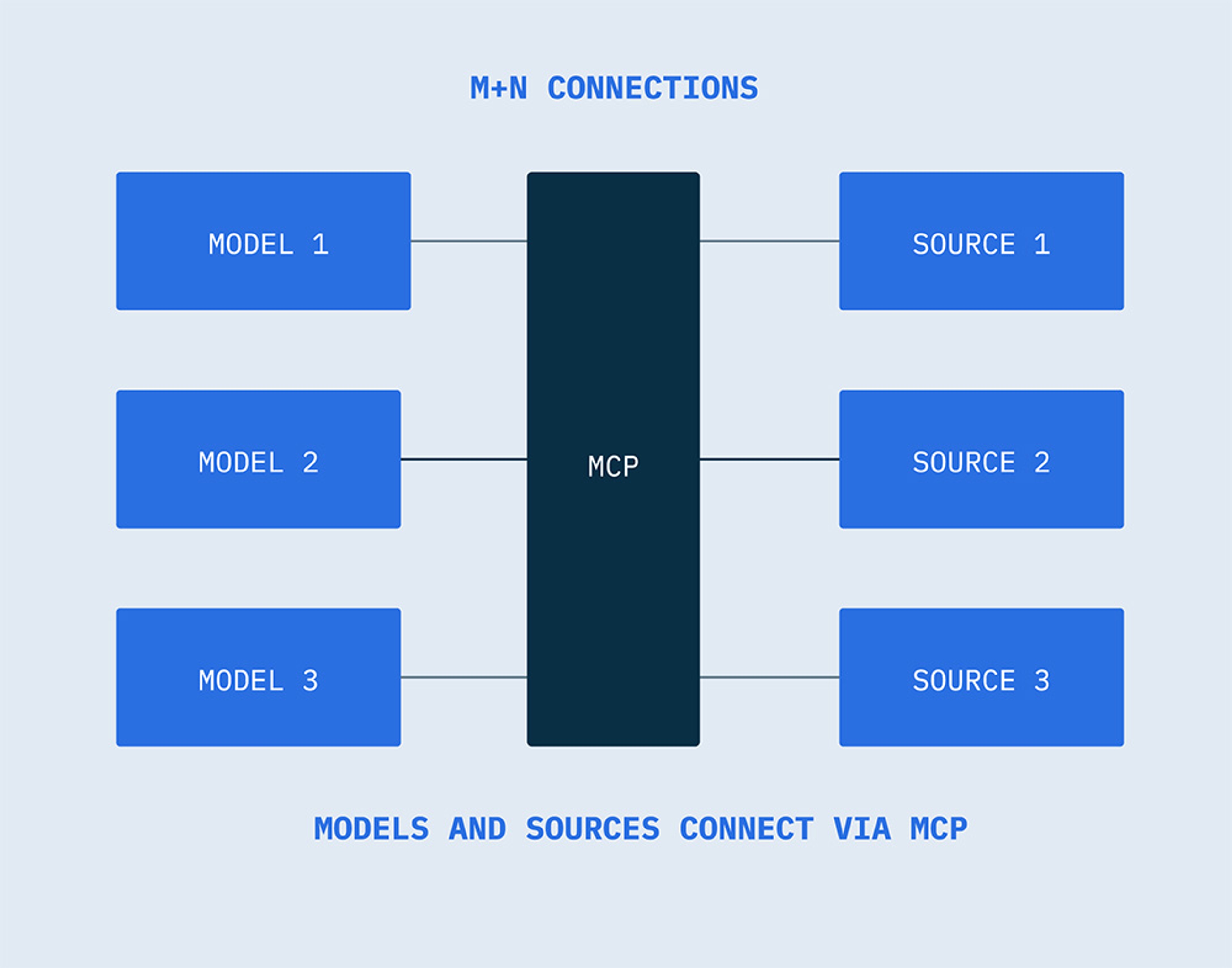

Luckily, Anthropic devised a solution to the mess of custom integrations that results from the MxN approach. The Model Context Protocol (MCP) is an open standard that provides a universal way of connecting AI models to external data sources and systems.

MCP provides a common interface, regardless of data source, eliminating the need for custom integrations for models and sources. The use of MCP as a middle layer reduces the MxN problem to a much more scalable M+N, as each model and source only has to integrate with MCP.
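To make the difference concrete, here is a minimal sketch that counts the integrations needed under each approach. The function names are illustrative, and the counts assume one connector per model-source pair in the MxN case and one MCP integration per model and per source in the M+N case.

```python
def point_to_point_integrations(models: int, sources: int) -> int:
    """Every model needs its own connector to every source: M x N."""
    return models * sources


def shared_protocol_integrations(models: int, sources: int) -> int:
    """Each model and each source integrates once with the shared protocol: M + N."""
    return models + sources


if __name__ == "__main__":
    # Compare the two approaches for a few ecosystem sizes.
    for m, n in [(3, 5), (5, 20), (10, 100)]:
        custom = point_to_point_integrations(m, n)
        shared = shared_protocol_integrations(m, n)
        print(f"{m} models, {n} sources: {custom} custom connectors vs {shared} MCP integrations")
```

With 5 models and 20 sources, that is 100 custom connectors versus 25 MCP integrations, and the gap widens as either number grows.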

The USB-C port analogy is often used for MCP, as it, too, uses a single connectivity standard to provide a universal way to connect different devices. In fact, any common protocol has the same kind of utility. HTTP created a universal language for web browsers and servers to interact. MCP enables interoperability across platforms like OpenAI, Anthropic, Google DeepMind, and Microsoft, giving AI assistants access to tools and repositories in a secure and standardized way.

How Does MCP Work?

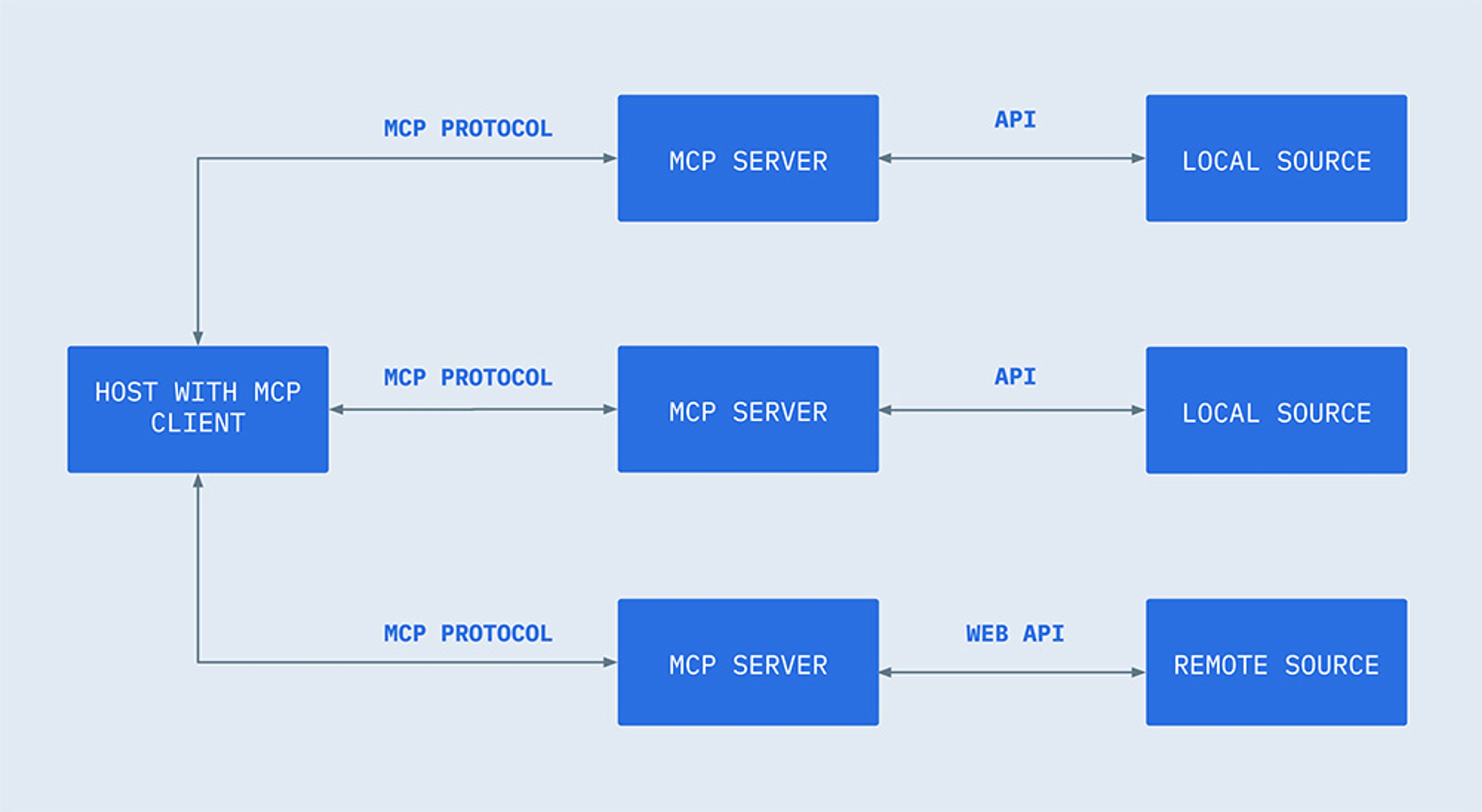

MCP is built using a client-server architecture, and works as follows:

- The AI application (host) uses connectors (clients) to send MCP requests for information or actions to an MCP server.

- The server receives the requests, acting as an abstraction layer in front of the data source, exposing a controlled set of functions.

- The server then translates the model’s requests into safe, scoped operations. This means data is accessed securely and consistently, and the model doesn’t need to know server implementation details for each data source.

- The server returns the result using MCP.

The server capabilities are exposed via JSON-RPC methods, which are mapped to one or more internal database queries or functions. These could include file lookups, data queries, transformation functions, or any other tool-like operation.

The AI model can introspect the available methods and call them with specific parameters. The server authenticates the request and calls the appropriate backend query using its own connection to the database. A structured response is then returned to the model. This completely decouples the database logic from the AI model.
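The flow described above can be sketched as a small in-memory dispatcher. This is a simplified illustration rather than a complete MCP server: it handles only the `tools/list` and `tools/call` methods from the MCP spec, and it omits the initialization handshake, transport, and authentication. The `list_versions` tool, the `PACKAGES` data, and the `handle_request` function are hypothetical names invented for this example.

```python
import json

# Hypothetical stand-in for the backend; a real server would use its own database connection.
PACKAGES = [
    {"name": "libfoo", "version": "1.0.0"},
    {"name": "libfoo", "version": "1.1.0"},
    {"name": "libbar", "version": "2.3.1"},
]


def list_versions(name: str) -> list:
    """Backend query the model never sees directly."""
    return [p["version"] for p in PACKAGES if p["name"] == name]


# Controlled set of functions the server chooses to expose (hypothetical tool).
TOOLS = {
    "list_versions": {
        "description": "List all known versions of a package.",
        "inputSchema": {
            "type": "object",
            "properties": {"name": {"type": "string"}},
            "required": ["name"],
        },
        "handler": list_versions,
    }
}


def error(req_id, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}})


def handle_request(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request and return the JSON response."""
    request = json.loads(raw)
    req_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})

    if method == "tools/list":
        # Introspection: describe each tool without exposing its implementation.
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        result = {"tools": tools}
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return error(req_id, -32602, "Unknown tool")
        output = tool["handler"](**params.get("arguments", {}))
        # Structured result returned to the model as text content.
        result = {"content": [{"type": "text", "text": json.dumps(output)}]}
    else:
        return error(req_id, -32601, "Method not found")

    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


if __name__ == "__main__":
    call = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "list_versions", "arguments": {"name": "libfoo"}},
    }
    print(handle_request(json.dumps(call)))
```

The model only ever sees the tool name, description, and input schema. The query logic stays behind the server, which is the decoupling described above.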

Security in MCP

MCP provides powerful capabilities through code execution and data access, and those capabilities carry security and privacy risks. To address this, MCP includes a series of best-practice recommendations for securing data within your infrastructure.

These best practices are shaped around four key principles:

- User Consent and Control - Users must explicitly consent to and understand what data is accessed and what actions are performed. Implementations should offer clear UI elements to authorize and review activities.

- Data Privacy - Resource data must not be transmitted or exposed without user approval. All access must be explicitly granted and protected through proper controls.

- Tool Safety - Since tools may trigger arbitrary code execution, hosts must treat them with caution and verify trust. Descriptions alone should not be trusted unless verified, and execution must be explicitly authorized.

- LLM Sampling Controls - Any use of LLM sampling must be user-approved. Users should be able to control whether sampling occurs, what prompt is sent, and what response visibility the server has.

MCP itself cannot enforce these security principles; that is the responsibility of developers, who should follow these guidelines as outlined in the MCP spec:

- The application should use robust flows for consent and authorization

- All security implications must clearly be outlined in documentation

- Appropriate access controls and data protection must be in place

- Follow all security best practices when building integrations

- When designing features, always consider privacy implications

Some diligence is required to ensure appropriate security controls are in place.
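As one illustration of the user consent and tool safety principles, a host could gate every tool invocation behind an explicit approval step. The sketch below is an assumption about how such a gate might look, not part of MCP itself: `call_with_consent`, `ConsentDenied`, and the `ask_user` and `run_tool` callbacks are hypothetical names.

```python
from typing import Any, Callable, Dict


class ConsentDenied(Exception):
    """Raised when the user declines a tool invocation."""


def call_with_consent(
    tool_name: str,
    arguments: Dict[str, Any],
    run_tool: Callable[[str, Dict[str, Any]], Any],
    ask_user: Callable[[str], bool],
) -> Any:
    """Run a tool only after the user has explicitly approved this exact call."""
    # Show the user what will run and with which arguments before anything executes.
    prompt = f"Allow tool '{tool_name}' to run with arguments {arguments}?"
    if not ask_user(prompt):
        raise ConsentDenied(tool_name)
    return run_tool(tool_name, arguments)


if __name__ == "__main__":
    # Hypothetical tool runner; a real host would forward the call to an MCP server.
    def run_tool(name: str, args: Dict[str, Any]) -> str:
        return f"ran {name}"

    # Console prompt as a stand-in for a proper authorization UI.
    def ask_user(prompt: str) -> bool:
        return input(prompt + " [y/N] ").strip().lower() == "y"

    print(call_with_consent("list_versions", {"name": "libfoo"}, run_tool, ask_user))
```

Keeping the approval callback separate from the tool runner means the same gate can sit in front of any tool, and the prompt can be replaced with whatever review UI the application provides.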

MCP: An Emergent Standard

As explained in the MCP documentation, MCP provides a standardized way to:

- Share context (information) with LLMs

- Expose tooling and capabilities to AI systems

- Build modular integrations, composed of task-specific capabilities (such as retrieving metadata, or querying an audit log) that can be chained into larger, reusable workflows

- Easily switch between LLMs

- Approach data security within your infrastructure

These attributes, along with Anthropic’s credentials, have helped MCP gain traction quickly. Although it was released only in 2024, MCP is becoming an industry standard and is now supported by OpenAI. There’s already a long list of pre-built MCP integrations that can be plugged into LLMs.

Using MCP and AI in Artifact Management

Cloudsmith has built a prototype MCP server as a step towards LLM and AI agent interaction with the artifacts and data in your Cloudsmith workspace. This could radically transform how you can control your software supply chain. It would replace potentially time-consuming command-line or UI workflows and provide fast and intuitive control with natural language prompts.

For example, you could ask “List all versions of a particular library in Cloudsmith”, “Tell me how many packages I have in Cloudsmith with a high CVE or that would violate a certain policy”, or “If I reduce retention from 90 to 30 days, what will be deleted? How will that impact my allowances?”

The image below shows an example from the integration of our proof-of-concept MCP server with Claude. We asked, “What repository contains the Ubuntu 14.04 Docker image?”

Cloudsmith is in the early stages of this research, but our initial prototype is showing huge promise. For more information and examples, see our MCP server feature announcement.

The Future of Artifact Management

MCP clearly represents an exciting opportunity for application developers to plug into AI in a scalable and economical way. Cloudsmith has started this journey with our MCP server. DevOps and developer teams will soon be able to use intelligent agents to automate, assist, or enhance their artifact management and software supply chain workflows. This is potentially transformative and may well become the future of artifact management.

If you'd like to register for early access to the Cloudsmith MCP server, click here.
