AI-generated code is changing the demands on artifact management

Artificial intelligence has moved from futuristic concept to daily collaborator for most development teams. Tools like GitHub Copilot and ChatGPT are writing functions, suggesting dependencies, and building application skeletons at a blistering pace. This revolution in speed is undeniable, but it comes with a critical, often-overlooked consequence: AI-generated code is fundamentally changing the demands on artifact management.

We're moving faster than ever, but that speed has created massive blind spots in our software supply chain. The core question is no longer just "Can AI write this code?" but "Should we trust the code AI writes?"

Does AI check its sources and verify provenance?

Let's address the first fundamental question. When an LLM suggests a software package or a new dependency, does it perform any provenance checks?

The short answer is no.

An AI model doesn't "know" if a dependency is credible, accurate, or safe. It's not testing that package in a sandbox. It's making a statistically probable recommendation based on the data it was trained on.

This creates a terrifying new attack vector. The AI has no concept of:

- Typosquatting: An LLM might just as easily suggest fabrice instead of fabric, pulling in a malicious package. Much depends on how you phrase the prompt, and sometimes you get exactly what you asked for, whether or not that is a good package.

- Malware: AI can't scan a dependency for malware before recommending it. After the ‘s1ngularity’ and ‘shai-hulud’ attacks on the NPM ecosystem, there is a heightened focus on understanding the contents of open-source packages that we consume from these upstreams.

- Credibility: AI doesn't differentiate between a well-maintained library from a trusted foundation like the CNCF and an abandoned, vulnerable package published by a random user that is unlikely to be maintained going forward.

If a developer accepts that suggestion, a compromised dependency is instantly pulled into the codebase, bypassing all traditional human vetting.
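One way to put a human checkpoint back in front of AI suggestions is to install only dependencies the team has already vetted. The bash sketch below assumes a plain-text allowlist (a hypothetical `approved-packages.txt`, one package name per line; this is a team convention, not a pip feature) and compares names after PEP 503 normalisation, so `Fabric` and `fabric` match while `fabrice` does not.

```shell
#!/usr/bin/env bash
# Sketch: refuse AI-suggested PyPI dependencies that are not on a team-approved
# allowlist. The allowlist file format (one name per line, "#" comments) is a
# hypothetical convention used only for this example.

# Normalise a Python package name as PEP 503 does:
# lowercase, then collapse runs of "-", "_" and "." into a single "-".
normalize_name() {
  printf '%s\n' "$1" | tr '[:upper:]' '[:lower:]' | sed -E 's/[-_.]+/-/g'
}

# Return 0 if the package (first argument) appears in the allowlist file
# (second argument) after normalisation, 1 otherwise.
is_approved() {
  local name allowlist entry
  name=$(normalize_name "$1")
  allowlist=$2
  while IFS= read -r entry || [[ -n $entry ]]; do
    # Skip blank lines and comment lines.
    [[ -z $entry || $entry == \#* ]] && continue
    if [[ $(normalize_name "$entry") == "$name" ]]; then
      return 0
    fi
  done < "$allowlist"
  return 1
}
```

A typical use would be `is_approved "$pkg" approved-packages.txt && pip install "$pkg"`. In practice this kind of gate usually lives in the artifact manager or package proxy rather than on each developer's machine; the script only illustrates the idea.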

Typosquatting

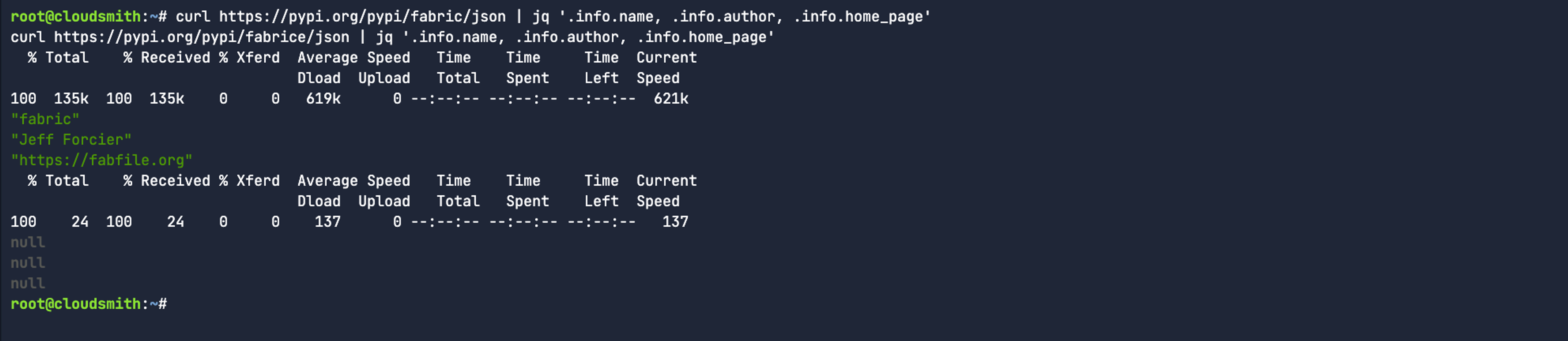

Typosquatting is a type of cybercrime where attackers register domain names, or in this case dependency names, that are common misspellings or slight variations of legitimate websites, packages, or dependencies. The goal is to trick users into visiting a malicious site or downloading a software dependency that ultimately contains malware. The fabric vs. fabrice case mentioned earlier is a perfect example: one extra letter, and many users wouldn’t immediately recognise it as a typo. Let’s query PyPI to see the owner of both packages:

curl https://pypi.org/pypi/fabric/json | jq '.info.name, .info.author, .info.home_page'

curl https://pypi.org/pypi/fabrice/json | jq '.info.name, .info.author, .info.home_page'

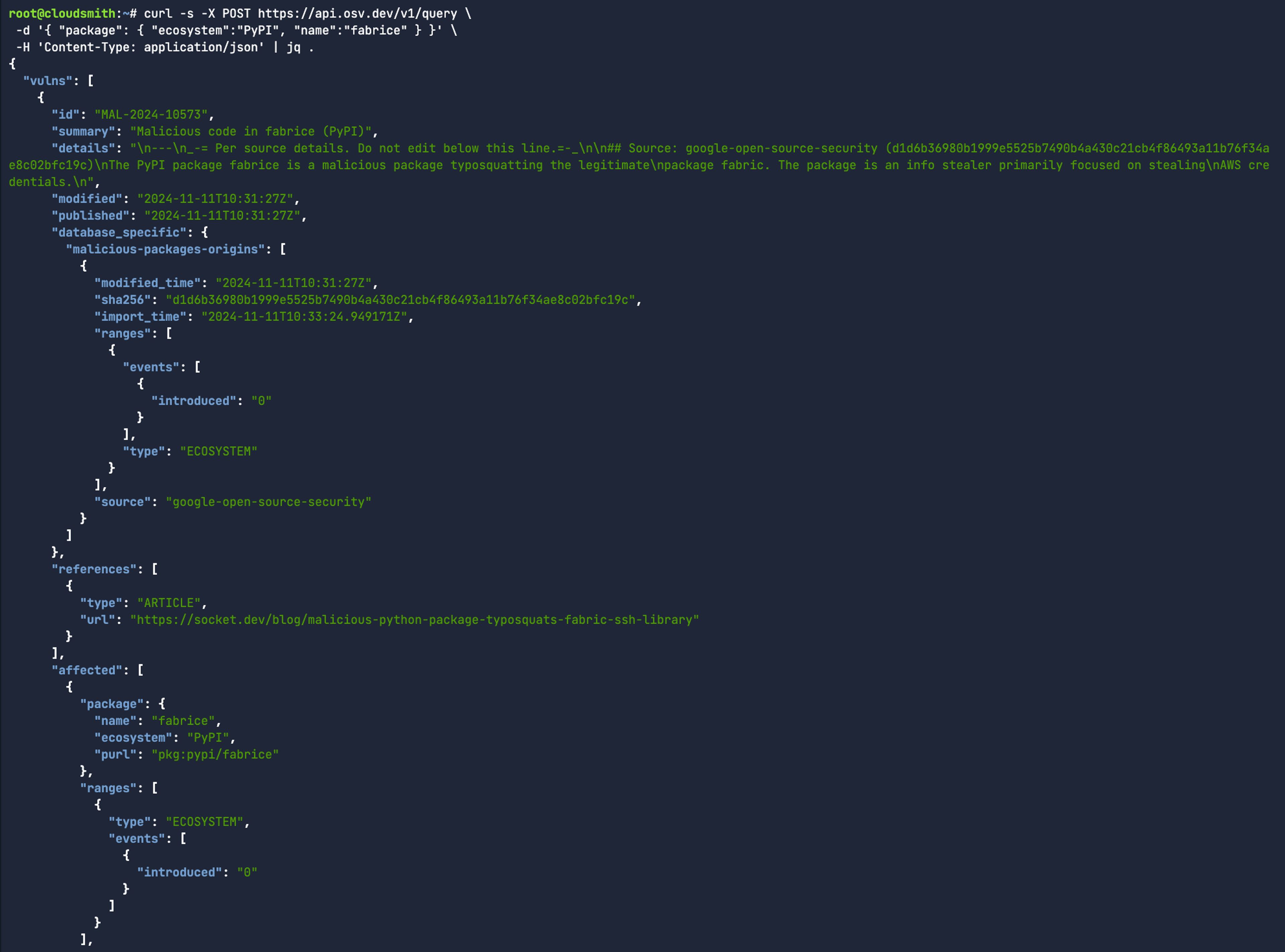

For fabric, the author is correctly listed as Jeff Forcier (AKA bitprophet). The typosquatted package name, by contrast, returns “null” for the owner. That is because fabrice no longer exists on PyPI: it was intentionally created to cause harm and has since been removed. While that’s good for the community, an organisation may already have cached that package locally. In those cases, the OSV.dev public API is a useful way to identify whether your cached dependencies were created as part of a typosquatting campaign:

curl -s -X POST https://api.osv.dev/v1/query \
  -d '{ "package": { "ecosystem":"PyPI", "name":"fabrice" } }' \
  -H 'Content-Type: application/json' | jq .
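To run that check across everything already cached, the same query can be generated per package. This is a minimal bash sketch, assuming curl and jq are installed, a hypothetical `cached-packages.txt` export with one package name per line, and package names that need no JSON escaping. The `jq -r '.vulns[]?.id'` filter prints any advisory IDs OSV.dev returns and prints nothing when the response has no `vulns` field.

```shell
#!/usr/bin/env bash
# Build an OSV.dev /v1/query request body, optionally pinned to a version.
# Assumption: names and versions contain no quotes or backslashes.
osv_query_payload() {
  local ecosystem=$1 name=$2 version=${3:-}
  if [[ -n $version ]]; then
    printf '{"package":{"ecosystem":"%s","name":"%s"},"version":"%s"}\n' \
      "$ecosystem" "$name" "$version"
  else
    printf '{"package":{"ecosystem":"%s","name":"%s"}}\n' "$ecosystem" "$name"
  fi
}

# Query OSV.dev for every package name listed (one per line) in a file,
# for example a hypothetical export of packages cached in a local mirror.
# Needs network access plus curl and jq.
check_cached_names() {
  local ecosystem=$1 list=$2 name
  while IFS= read -r name || [[ -n $name ]]; do
    [[ -z $name ]] && continue
    printf '== %s\n' "$name"
    # "-d @-" makes curl read the request body from the pipe.
    osv_query_payload "$ecosystem" "$name" |
      curl -s -X POST https://api.osv.dev/v1/query \
        -H 'Content-Type: application/json' -d @- |
      jq -r '.vulns[]?.id'
  done < "$list"
}
```

Usage would look like `check_cached_names PyPI cached-packages.txt`. Any name followed by advisory IDs deserves a closer look before it is served from the cache again.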

So what’s all this got to do with generative AI? GenAI is prone to hallucinating and recommending package names that simply don’t exist. Adversaries have picked up on this behaviour and register packages under those hallucinated (or easily mistyped) names, in the hope that “AI slop” code generation recommends one of them. This is commonly known as slopsquatting. To stay ahead of slopsquatting, modern artifact management platforms are expected to run full provenance checks on all software artifacts, establishing who created them, whether they are credible, and whether they have already been flagged as compromised according to the OSV API.
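Typosquatted names, and many slopsquatted ones, sit only an edit or two away from a popular package. A rough heuristic, sketched here in bash, is to compute the Levenshtein edit distance between a suggested name and a list of known-good names and flag anything that is close but not identical. The known-good list and the distance threshold are illustrative assumptions. This catches fabric/fabrice, but it will not catch a hallucinated name that resembles nothing familiar, which is why provenance and OSV checks are still needed.

```shell
#!/usr/bin/env bash
# Levenshtein edit distance between two strings (insertions, deletions and
# substitutions each cost 1), using the classic two-row dynamic programme.
levenshtein() {
  local a=$1 b=$2
  local -i la=${#a} lb=${#b} i j cost del ins sub
  local -a prev curr
  # Row 0: distance from the empty prefix of $a to each prefix of $b.
  for ((j = 0; j <= lb; j++)); do prev[j]=$j; done
  for ((i = 1; i <= la; i++)); do
    curr=()
    curr[0]=$i
    for ((j = 1; j <= lb; j++)); do
      if [[ ${a:i-1:1} == "${b:j-1:1}" ]]; then cost=0; else cost=1; fi
      del=$((prev[j] + 1))
      ins=$((curr[j - 1] + 1))
      sub=$((prev[j - 1] + cost))
      curr[j]=$del
      if ((ins < curr[j])); then curr[j]=$ins; fi
      if ((sub < curr[j])); then curr[j]=$sub; fi
    done
    prev=("${curr[@]}")
  done
  echo "${prev[lb]}"
}

# Usage: near_misses <candidate> <max_distance> <known-good name>...
# Prints every known-good name within 1..max_distance edits of the candidate.
near_misses() {
  local candidate=$1 max_dist=$2 known d
  shift 2
  for known in "$@"; do
    d=$(levenshtein "$candidate" "$known")
    if ((d > 0 && d <= max_dist)); then
      printf '%s\n' "$known"
    fi
  done
}
```

For example, `near_misses fabrice 2 fabric requests flask` prints `fabric`, a strong hint that the suggestion is a look-alike rather than the intended library. Note that Levenshtein distance counts a swapped pair of letters as two edits, so a threshold of 1 would miss transpositions such as `reqeusts`.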

Malware

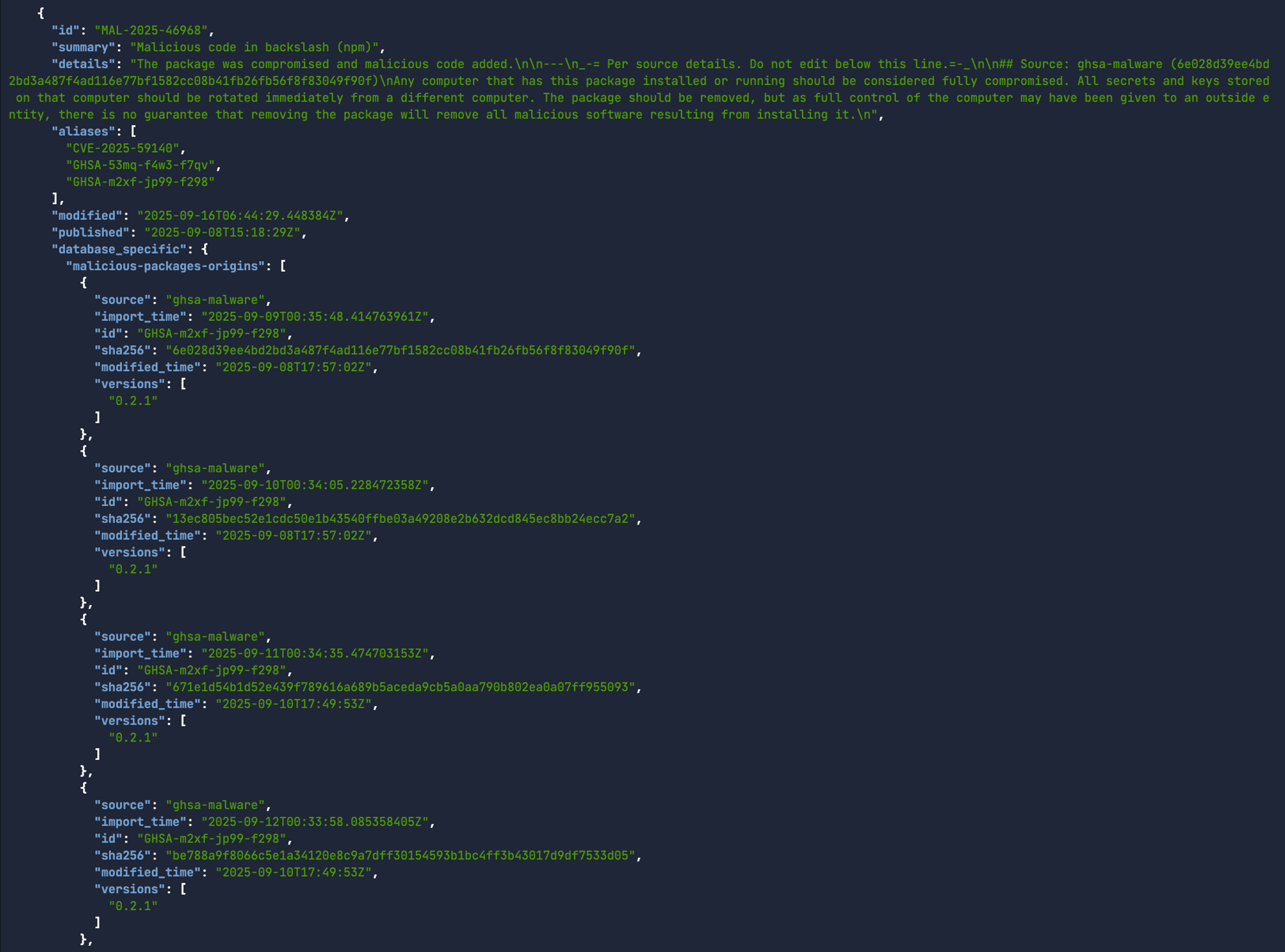

Unlike a software vulnerability, malware is software specifically designed to disrupt, damage, or gain unauthorised access to a computer system. Traditional vulnerability scanners look for exploitable flaws in application code, which are tracked as CVEs (like CVE-2025-3248). However, malware contained within compromised upstream packages will not show up in those CVE reports. Instead, we can use the same OSV.dev public API to check whether an open-source upstream package, such as backslash v0.2.1 on NPM, contains malware.

curl -s -X POST https://api.osv.dev/v1/query -d '{
  "package": {
    "ecosystem":"npm",
    "name":"backslash"
  },
  "version":"0.2.1"
}' -H 'Content-Type: application/json' | jq .

Running this command shows that the package is identified as containing malware, with the unique identifier MAL-2025-46968. Nobody can be expected to run this check by hand every time they want to know whether a specific dependency and version contains malware; that would take all day. Thankfully, Cloudsmith integrates the OpenSSF Malicious Packages API directly within our product. On package synchronisation, we automatically scan for known malware identifiers (i.e. MAL-YEAR-ID) associated with the open-source dependency. If there’s a match, the package can be quarantined automatically through OPA policies.
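An OSV.dev response can mix ordinary vulnerability advisories with malware reports, and the OpenSSF Malicious Packages entries are the ones with the `MAL-` prefix. The bash sketch below, assuming curl and jq are installed and that names and versions need no JSON escaping, queries one package version and then keeps only the malware identifiers.

```shell
#!/usr/bin/env bash
# Query OSV.dev for one package version and print every advisory ID returned.
# Requires network access plus curl and jq.
osv_ids() {
  local ecosystem=$1 name=$2 version=$3
  curl -s -X POST https://api.osv.dev/v1/query \
    -H 'Content-Type: application/json' \
    -d "{\"package\":{\"ecosystem\":\"$ecosystem\",\"name\":\"$name\"},\"version\":\"$version\"}" |
    jq -r '.vulns[]?.id'
}

# Read advisory IDs on stdin and keep only malware IDs of the form MAL-YYYY-N.
# "|| true" makes it exit 0 even when nothing matches, so it is safe in
# pipelines that run under "set -e".
malware_ids() {
  grep -E '^MAL-[0-9]{4}-[0-9]+$' || true
}
```

Running `osv_ids npm backslash 0.2.1 | malware_ids` should, given the result described for this package, print MAL-2025-46968; an empty output means OSV.dev currently has no malware report for that exact version.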

Most recently, a variant of the PhantomRaven malware was found in 126 NPM packages, designed to steal GitHub tokens from developers. If LLMs cannot quickly identify whether an upstream package is compromised, what stops generative AI tools from suggesting compromised packages? As malware becomes more prevalent in upstream repositories, the demands on artifact management change with it. Modern artifact management solutions need to identify compromised open-source packages and prevent developers from using them.
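The same idea can gate a CI pipeline, so a compromised dependency suggested by an AI assistant fails the build instead of reaching production. This bash sketch assumes a hypothetical `deps.txt` listing pinned npm dependencies as `name@version` (for example, generated from a lockfile), queries OSV.dev for each one, and returns non-zero if any `MAL-` advisory comes back. It is an illustration only, not a description of how Cloudsmith's synchronisation-time scanning works internally.

```shell
#!/usr/bin/env bash
# Split "name@version" into "name<TAB>version". Using the LAST "@" as the
# separator keeps scoped names such as "@scope/pkg@1.0.0" intact.
# Assumes every line includes a version.
split_npm_spec() {
  local spec=$1
  printf '%s\t%s\n' "${spec%@*}" "${spec##*@}"
}

# Return 1 if any listed dependency has a MAL-* advisory in OSV.dev, else 0.
# Requires network access plus curl and jq.
malware_gate() {
  local list=$1 spec name version ids found=0
  while IFS= read -r spec || [[ -n $spec ]]; do
    [[ -z $spec ]] && continue
    IFS=$'\t' read -r name version < <(split_npm_spec "$spec")
    ids=$(curl -s -X POST https://api.osv.dev/v1/query \
      -H 'Content-Type: application/json' \
      -d "{\"package\":{\"ecosystem\":\"npm\",\"name\":\"$name\"},\"version\":\"$version\"}" |
      jq -r '.vulns[]?.id | select(startswith("MAL-"))')
    if [[ -n $ids ]]; then
      printf 'BLOCKED %s: %s\n' "$spec" "${ids//$'\n'/ }"
      found=1
    fi
  done < "$list"
  return "$found"
}
```

In a pipeline step this would be invoked as `malware_gate deps.txt || exit 1`. Querying per package keeps the sketch simple; for large dependency trees, OSV.dev also offers a batch endpoint (`/v1/querybatch`) that accepts many queries in one request.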

Credibility

AI models used for code generation are trained on vast, uncurated datasets of public repositories, learning statistical patterns rather than semantic quality. This training methodology makes them incapable of assessing software provenance or maintenance hygiene. The model does not understand that a CNCF-hosted project undergoes rigorous security audits, has a dedicated maintenance team, and follows a clear governance model. To the AI, that project's code patterns may look statistically similar to an abandoned, single-maintainer package on GitHub that contains known CVEs or is a prime candidate for a typosquatting attack. This "credibility blindness" means the AI's suggestions are based on pattern frequency, not on a risk assessment of the dependency being recommended.

This blind spot maps directly onto today’s software supply chain risk: an AI can confidently recommend a dependency that is functionally correct for the prompt but introduces significant security debt. The data backs this up. Chainguard’s "2026 Engineering Reality Report" found that 1 in 5 respondents said their engineers are not allowed to use AI for some of the most time-intensive tasks, such as patching vulnerabilities, running tests, or conducting code reviews. These are exactly the tasks where technical credibility is needed to make the final judgement.

Furthermore, AI models trained on these open-source ecosystems will inevitably learn from, and recommend, packages from those upstreams that have historically been compromised. This problem is recognised by security foundations like OWASP, whose "Top 10 for LLM Applications" (2023 edition) lists risks like "Insecure Output Handling" (LLM02) and "Training Data Poisoning" (LLM03), where the model's output or its training data can be leveraged to inject vulnerabilities directly into a developer's workflow.

Ultimately, the credibility of the dependencies these outputs recommend can be benchmarked against frameworks such as the OpenSSF Scorecard. This project was built by open-source developers to help secure the critical projects the community depends on. It assesses open-source projects for security risks by running a series of automated checks. You can use it to proactively analyse the security posture of your own code and make better-informed decisions about acceptable risks. The tool also helps you evaluate other projects and dependencies, giving you a basis for working with LLMs on improvements before you integrate those dependencies into your codebase.
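Scorecard results for many widely used repositories are published through a public REST API, so a dependency's score can be checked before it is adopted. This is a bash sketch, assuming curl and jq, the `api.securityscorecards.dev` endpoint returning a JSON object with an aggregate `score` field, and an arbitrary minimum score of 7 out of 10 chosen purely for illustration; pick a threshold that matches your own risk appetite.

```shell
#!/usr/bin/env bash
# Return 0 if score >= threshold. Scores are decimals, so compare in awk
# rather than with bash integer arithmetic.
meets_threshold() {
  awk -v s="$1" -v t="$2" 'BEGIN { exit !(s + 0 >= t + 0) }'
}

# Print the aggregate Scorecard score for a GitHub repo given as "owner/repo",
# or nothing if no published result exists. Requires network, curl and jq.
scorecard_score() {
  curl -s "https://api.securityscorecards.dev/projects/github.com/$1" |
    jq -r '.score // empty'
}

# Report whether a repo meets the (assumed) threshold.
# Exit codes: 0 = OK, 1 = below threshold, 2 = no data.
check_repo() {
  local repo=$1 threshold=${2:-7} score
  score=$(scorecard_score "$repo")
  if [[ -z $score ]]; then
    echo "no Scorecard data for $repo" >&2
    return 2
  fi
  if meets_threshold "$score" "$threshold"; then
    echo "OK $repo ($score)"
  else
    echo "REVIEW $repo ($score < $threshold)"
    return 1
  fi
}
```

For example, `check_repo ossf/scorecard 7`. A low score is a prompt for human review rather than an automatic rejection. For repositories without published results, the `scorecard` CLI (`scorecard --repo=github.com/<owner>/<repo>`) can run the checks locally, given a GitHub token.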

Does this mean we should be discouraged from using AI-generated code?

The short answer is 'no,' but a cautious and deliberate approach is essential.

We view generative AI much like any other programmable interface. It excels at following instructions, which means the quality of the output is directly dependent on the quality of the input.

A developer who is skilled in their domain will understand how to instruct the AI to produce high-quality, relevant content, thereby accelerating their workflow. For example, knowing precisely which software packages and versions are appropriate for a production application helps prevent the AI from referencing and introducing compromised dependencies.

By providing specific context, such as example code, the application framework, established code styles, library usage, specific dependency versions, and error handling protocols, a developer can guide the AI. This helps it follow established rules and produce code significantly faster than writing it by hand.
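Giving the AI specific dependency versions is easiest when the project itself pins them. As a hedged example for a Python project, this bash sketch lists requirement lines that are not pinned with `==`, so they can be fixed before the file is shared with an assistant as context. It deliberately skips comments and pip options such as `-r`, and it does not attempt full PEP 508 parsing.

```shell
#!/usr/bin/env bash
# Print requirement lines that are not pinned to an exact version with "==".
# Skips blank lines, comments and pip options (lines starting with "-").
unpinned_requirements() {
  local file=$1 line
  while IFS= read -r line || [[ -n $line ]]; do
    line=${line%%#*}               # strip trailing comments
    line=${line//[[:space:]]/}     # drop all whitespace
    [[ -z $line || $line == -* ]] && continue
    [[ $line == *"=="* ]] || printf '%s\n' "$line"
  done < "$file"
}
```

Running `unpinned_requirements requirements.txt` on a file containing `fabric==3.2.2`, `requests>=2.0` and `flask` would print the last two lines, which are the ones an assistant would otherwise be free to resolve to any version.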

Regardless of popular sentiment, our view is that generative AI is not close to replacing human intelligence in software development. Its current, practical role is to speed up and facilitate the quality work humans do, ultimately increasing overall productivity.

This new power, however, requires a corresponding increase in diligence for software governance, provenance checks, and vulnerability scanning. Maintaining control over AI-generated code means knowing exactly what is running in the estate, who created it, and whether it is safe. All of this is achievable through a comprehensive artifact management platform. If you’d like to learn more, we have an upcoming webinar on slopsquatting in the age of GenAI.
